The intersection of music and emotion has long fascinated scientists, artists, and technologists alike. In recent years, dynamic music emotion mapping has emerged as a field that blends affective computing with audio engineering. The approach seeks to create adaptive soundscapes that respond in real time to human emotional states, opening new possibilities for therapeutic applications, immersive entertainment, and even workplace productivity enhancement.

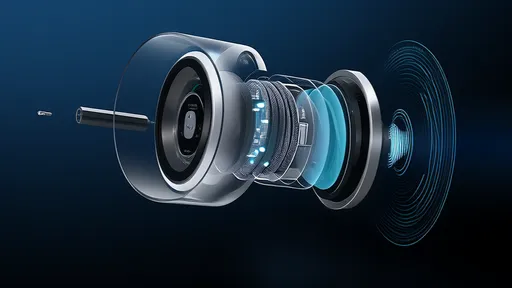

At its core, dynamic emotion mapping relies on algorithms that analyze both musical characteristics and physiological signals. The tempo, harmony, and timbre of music each carry distinct emotional signatures – a fact composers have intuitively understood for centuries. What sets this technology apart is its ability to quantify these relationships and adjust musical parameters in near real time based on biometric feedback such as heart rate variability, skin conductance, or facial microexpressions.
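To make the idea of mapping biometric feedback onto a musical parameter concrete, here is a minimal sketch. It is not taken from any particular system: the function names, the resting and peak ranges, the equal weighting of the two signals, and the use of plain heart rate in place of heart rate variability are all illustrative assumptions.

```python
def clamp(x, lo=0.0, hi=1.0):
    """Restrict x to the range [lo, hi]."""
    return max(lo, min(hi, x))


def estimate_arousal(heart_rate_bpm, skin_conductance_us,
                     rest_hr=60.0, max_hr=120.0,
                     rest_sc=2.0, max_sc=12.0):
    """Return a rough arousal score in [0, 1].

    The resting/peak ranges and the 50/50 weighting are assumptions
    chosen for illustration, not calibrated values.
    """
    hr_norm = clamp((heart_rate_bpm - rest_hr) / (max_hr - rest_hr))
    sc_norm = clamp((skin_conductance_us - rest_sc) / (max_sc - rest_sc))
    return 0.5 * hr_norm + 0.5 * sc_norm


def arousal_to_tempo(arousal, min_bpm=60.0, max_bpm=140.0):
    """Map an arousal score linearly onto a tempo range (assumed bounds)."""
    return min_bpm + clamp(arousal) * (max_bpm - min_bpm)


if __name__ == "__main__":
    arousal = estimate_arousal(heart_rate_bpm=90, skin_conductance_us=7)
    print(arousal, arousal_to_tempo(arousal))
```

A real system would calibrate these ranges per listener and would likely drive harmony and timbre as well as tempo; the linear mapping is only the simplest possible starting point.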

Several research institutions have made significant strides in developing emotion-aware music systems. The MIT Media Lab's Affective Computing Group has demonstrated how machine learning models can predict emotional responses to music with surprising accuracy. Their systems analyze over fifty musical features – from spectral centroid to rhythmic regularity – and correlate them with self-reported emotional states across diverse listener populations. This data-driven approach moves beyond simplistic "happy-sad" dichotomies to capture nuanced emotional experiences.
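Of the features mentioned, spectral centroid is one of the simplest to compute: it is the magnitude-weighted mean frequency of a short audio frame, and it is often used as a rough proxy for perceived brightness. The sketch below is a generic textbook formulation with a naive DFT, written with the standard library only for readability; it is not the pipeline of any lab named above, and the function names are hypothetical.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (fine for short frames only)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = 0j
        for t, x in enumerate(frame):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        mags.append(abs(acc))
    return mags


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency of the frame, in Hz."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0  # silent frame: centroid is undefined, report 0
    n = len(frame)
    freqs = [k * sample_rate / n for k in range(len(mags))]
    return sum(f * m for f, m in zip(freqs, mags)) / total


if __name__ == "__main__":
    sr = 8000
    # A 1000 Hz sine that lands exactly on a DFT bin of a 64-sample frame.
    frame = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(64)]
    print(round(spectral_centroid(frame, sr), 3))
```

In practice an FFT library would replace the quadratic-time loop, and the centroid would be tracked frame by frame alongside the other features before being correlated with listener reports.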

The therapeutic potential of emotionally adaptive music is particularly compelling. Clinical studies have shown promising results in using dynamic music mapping for anxiety reduction, pain management, and mood regulation. Unlike static playlists, these systems create personalized auditory experiences that evolve with the patient's changing emotional needs. A patient experiencing a panic attack might automatically hear music that gradually shifts from matching their heightened arousal to guiding them toward calmness through carefully modulated tempo and harmonic progression.
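The gradual shift described here, starting from music that matches the listener's current arousal and moving toward a calmer target, can be sketched as a simple tempo schedule. The linear ramp, the function name, and the example tempos are assumptions for illustration; a clinical system would presumably shape the curve from ongoing biometric feedback rather than fix it in advance.

```python
def tempo_trajectory(start_bpm, calm_bpm, steps):
    """Return `steps` tempos moving linearly from start_bpm to calm_bpm.

    The first value matches the listener's current state; the last is
    the calming target. A linear ramp is an illustrative assumption.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    span = calm_bpm - start_bpm
    return [start_bpm + span * i / (steps - 1) for i in range(steps)]


if __name__ == "__main__":
    # Hypothetical session: begin at 120 BPM and settle at 60 BPM.
    for bpm in tempo_trajectory(120, 60, 5):
        print(bpm)
```

The same schedule could be applied to other parameters, such as harmonic tension or dynamics, so that all of them move toward the calm target together.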

Entertainment applications are pushing the boundaries of immersive experiences. Video game developers now experiment with soundtracks that adapt not just to in-game events but to players' emotional states measured through webcams or wearable devices. Imagine a horror game where the musical score intensifies precisely when your pulse quickens, or a meditation app that subtly adjusts ambient tones as your breathing patterns change. These implementations raise fascinating questions about the ethics of emotional manipulation through sound.

Technological challenges remain in creating seamless, unobtrusive emotion detection systems. Current methods often rely on cumbersome sensors or imperfect camera-based emotion recognition. The next generation of dynamic music mapping aims to incorporate multimodal sensing – combining voice analysis, typing patterns, and even environmental context to infer emotional states more naturally. Privacy concerns also loom large as these systems potentially gain access to intimate biometric data.
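One common way to combine several imperfect signals is late fusion: each modality produces its own estimate together with a confidence, and the estimates are averaged with the confidences as weights. The sketch below assumes each modality already yields an arousal value in [0, 1]; the function name and the example numbers are hypothetical.

```python
def fuse_estimates(estimates):
    """Confidence-weighted average of (arousal, confidence) pairs.

    Returns None when no modality reports any confidence, so the caller
    can hold the current music state instead of reacting to noise.
    """
    total_conf = sum(conf for _, conf in estimates)
    if total_conf <= 0:
        return None
    return sum(arousal * conf for arousal, conf in estimates) / total_conf


if __name__ == "__main__":
    readings = [
        (0.8, 0.6),  # voice analysis (assumed values)
        (0.4, 0.3),  # typing patterns (assumed values)
        (0.5, 0.1),  # environmental context (assumed values)
    ]
    print(fuse_estimates(readings))
```

Weighting by confidence lets a noisy channel, such as a camera in poor light, fade out without being switched off entirely.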

From a creative perspective, dynamic emotion mapping challenges traditional notions of musical authorship. When a composition continuously reshapes itself in response to listener physiology, who is the true creator – the original composer, the algorithm, or the listener themselves? Some avant-garde musicians embrace this ambiguity, crafting "living compositions" that exist as potentialities rather than fixed works. Others worry about the erosion of intentional artistic expression in favor of algorithmic crowd-pleasing.

The business implications are equally significant. Streaming platforms already use emotion-based recommendation systems, but dynamic mapping could enable real-time playlist optimization throughout your day. Your workout playlist might automatically ramp up intensity when it senses your energy flagging, or your focus playlist could introduce certain frequencies when it detects waning concentration. Such capabilities could turn music consumption from a passive experience into an interactive one.

Looking ahead, the convergence of dynamic music mapping with augmented reality and brain-computer interfaces suggests even more transformative applications. Preliminary experiments with EEG-based music generation show tantalizing possibilities for direct neural soundscapes. While these technologies remain in early stages, they point toward a future where the boundary between music we listen to and music we experience becomes increasingly blurred.

As with any powerful technology, dynamic music emotion mapping demands thoughtful implementation. The same systems that can alleviate suffering through therapeutic soundscapes could potentially be weaponized for manipulation or mood control. Establishing ethical frameworks and user protections will be crucial as these tools mature. What remains undeniable is that we stand at the threshold of a new era in musical experience – one where compositions don't just convey emotion, but converse with it.

By /Aug 7, 2025
